Source: Art: DALL-E/OpenAI

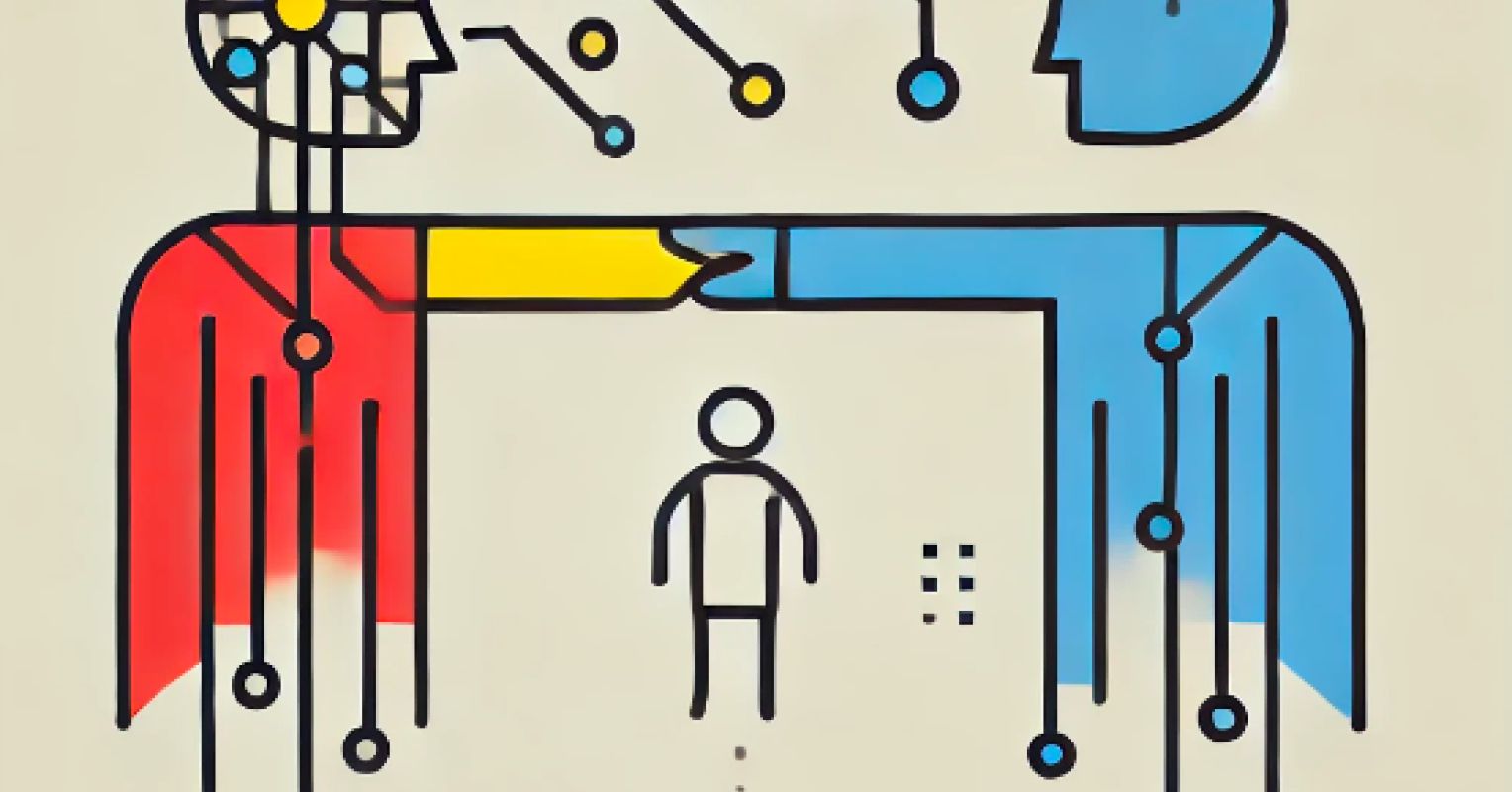

Today, we’re witnessing a powerful and curious transformation in our relationship with machines—a relationship increasingly defined by a unique and complex codependency. Large language models (LLMs), trained on vast amounts of human-generated text, are becoming an integral part of our cognitive processes. These models rely on us to “feed” them data, but in return, they offer us something both familiar and unprecedented—a stream of synthesized insights, solutions, and creative ideas. In essence, we are feeding the beast, and the beast is feeding us.

But this relationship is not as simple as input and output. Rather, it might represent a modern interdependence, a partnership that raises questions about autonomy, knowledge, and even the nature of intelligence itself. Just as interdependent human relationships can foster both growth and vulnerability, our partnership with LLMs holds similar promise and risk. Let’s take a closer look at what it means to depend on a machine for knowledge and insight, and how this new symbiosis might be reshaping the landscape of human cognition.

Feeding the Beast: Data as the Lifeblood of LLMs

Large language models are, at their core, reliant on the data we provide. Trained on billions of words from books, articles, conversations, and digital content, LLMs are an amalgamation of human thought, culture, and language. Without this data, they are just algorithms: potential energy waiting to be activated. It’s our collective knowledge and experience that breathes life into them, giving them the ability to understand context, generate responses, mimic creativity, and, in some sense, grow and evolve.

This dependence on human data is profound. LLMs cannot think independently; they do not possess consciousness or intentionality in the human sense. Their power lies in patterns, in the complex web of associations and probabilities derived from human language. Each response they generate is a reflection of the data they’ve consumed, a mirror of our own collective intelligence. The process of “feeding the beast” is ongoing; as our knowledge grows and changes, so too must the data that trains these models. In a sense, LLMs are like cognitive organisms whose survival and evolution are inextricably tied to the data we provide.

The Beast Feeds Us: Knowledge as Cognitive Fuel

In return for the data we provide, LLMs offer us something we haven’t had before—an entity that can not only store vast amounts of knowledge but also process and present it in ways that are immediately accessible, responsive, and often insightful. They can provide summaries, generate creative prompts, help solve problems, and even engage in thought-provoking dialogue. This capability is more than a technical feat; it is a cognitive boon, offering us a new way to think, learn, and create.

The knowledge that LLMs provide can be seen as a form of cognitive fuel or even a catalyst for thought itself. They allow us to expand our mental horizons, explore new perspectives, and accelerate the process of insight generation. In many ways, they serve as intellectual partners, capable of supporting us in tasks that were once limited to human interactions or that lay beyond the scope of human cognitive abilities altogether. Yet, while this access to a vast well of synthesized knowledge is empowering, it also introduces a fascinating shift in our cognitive landscape. We are beginning to rely on these models not just as tools but as thinking partners, a shift that changes the dynamics of our intellectual independence.

The Cost of Interdependence

For the first time, technological innovation is challenging the primacy of human cognition, raising questions about the necessity of certain cognitive tasks that were once solely our domain. This shift has both practical and philosophical implications, signaling a moment in history where human thought may find itself sharing, or even ceding, aspects of its traditional role to machines. It’s a complex and sobering reality, urging us to reflect on what we preserve as uniquely human in a world where our cognitive contributions are no longer singular.

Symbiosis or Parasitism?

The ethical dimensions of this dependency add another layer of complexity. Feeding the beast is not a neutral act. The data we provide to train these models is a reflection of our society—its values, biases, and priorities. Consequently, the responses generated by LLMs mirror these same biases, often amplifying them in ways we might not foresee. This feedback loop creates an ethical tension: by feeding the beast, we are also shaping its view of the world, influencing how it interprets and responds to human users.

Moreover, the LLM’s “diet” is not chosen at random. Certain knowledge is privileged, while other perspectives may be marginalized or omitted entirely. This selective feeding creates a version of intelligence that is, in some ways, both an extension of and a departure from human thought. The risk is that we may come to see the LLM’s responses as neutral or objective when, in reality, they are deeply influenced by the biases and limitations of the data they consume.

Navigating an Interdependent Future

Our relationship with LLMs is still in its infancy, but it is already reshaping the way we think about knowledge, intelligence, and agency. This new dependency challenges us to reconsider what it means to be autonomous thinkers in a world where machines can mimic, enhance, and even shape our cognitive processes. It also prompts us to ask difficult questions about the balance between cognitive empowerment and cognitive erosion, and about the ethical implications of a machine that is both dependent on and influential over human thought.

In the end, the question may not be whether we can live with this new dependency but rather how we choose to navigate it. If we can find a way to feed the beast while preserving the strength of our cognitive faculties, we may unlock a future where humans and machines think together, amplifying one another’s potential in ways we have only begun to imagine.